As more and more states employ algorithms in policing, the dystopian world of Minority Report might be closer to reality than to science fiction.

The use of algorithms in policing is not a new topic. PredPol, a for-profit company that pioneered predictive policing algorithms, drew widespread controversy in 2012, with critics charging that its predictions were racially biased. It uses data from past crimes, such as location, type of crime, and time, to predict where and when crimes are most likely to occur. These predictions are then used to allocate police patrols, leading to the over-policing of low-income, minority communities. This over-policing often leads to false arrests and the criminalization of minorities, feeding the country's fast-growing prison population.
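To make the idea concrete, here is a minimal sketch of place-and-time prediction from past incident records. It is not PredPol's actual method, which is proprietary; the incident data, the grid cell names, and the helper functions `time_bucket` and `rank_hotspots` are all hypothetical and exist only for illustration.

```python
from collections import Counter

# Hypothetical incident records: (grid_cell, crime_type, hour_of_day).
# All values are invented for illustration; this is not real crime data.
incidents = [
    ("cell_A", "burglary", 22),
    ("cell_A", "theft", 23),
    ("cell_A", "burglary", 21),
    ("cell_B", "theft", 14),
    ("cell_C", "assault", 2),
    ("cell_B", "theft", 15),
]


def time_bucket(hour):
    """Map an hour (0-23) to a coarse patrol shift (an assumed bucketing)."""
    if 6 <= hour < 14:
        return "day"
    if 14 <= hour < 22:
        return "evening"
    return "night"


def rank_hotspots(records, top_k=2):
    """Count past incidents per (cell, shift) and return the top_k pairs."""
    counts = Counter(
        (cell, time_bucket(hour)) for cell, _crime_type, hour in records
    )
    return counts.most_common(top_k)


hotspots = rank_hotspots(incidents)
for (cell, shift), count in hotspots:
    print(f"{cell} during the {shift} shift: {count} past incidents")
```

Even this toy version hints at the concern critics raise: the ranking depends entirely on which incidents were recorded in the past, so places that were patrolled more heavily, and therefore generated more records, can keep rising to the top of the list.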

Civil rights activists, lawyers, and community organizers have repeatedly criticized the use of these algorithms, arguing that systems trained on decades of racially biased data will produce racially biased results. Even so, almost every state in the U.S. currently uses algorithms for policing, sentencing, and/or deciding parole. In 2019, Idaho passed a law requiring public disclosure of how these algorithms work and what kinds of data they use, but the algorithms remain too complex for the average citizen to understand.

The most prominent new algorithms, including COMPAS, a commercial tool from the private company Northpointe (now Equivant), and one developed by UPenn researchers, categorize people on parole as either low or high risk, estimating how likely the person is to commit a crime in the future. COMPAS does so by taking data such as age, sex, and current and past convictions and comparing it to data on past offenders. This risk assessment then determines the level of surveillance allocated to that person. This technology was implemented in a few states in 2014 as a test run. The result was that people labeled “low risk” and given less surveillance were less likely to commit a violent crime than before the algorithm was implemented. The opposite was true of people labeled “high risk”, who were four times more likely to commit a violent crime than before.

While the new algorithm-based system benefits “low risk” people, it seems to criminalize “high risk” people by over-policing them, perpetuating a cycle in which they are incarcerated again after release. In addition, a study by Marc Faddoul, a researcher at UC Berkeley focusing on algorithmic fairness, shows that these types of risk assessments have a 97 percent false positive rate, which may be because they are often designed to overestimate risk as a protective measure. There are also cases where people surveilled because of their risk label are not aware of having been labeled, especially if the company that makes the algorithm, as with COMPAS, doesn’t share how its software works. This, in turn, violates the citizen’s right to due process and has been contested by civil rights lawyers.

On the subject of the algorithms’ racial bias, Richard Berk, the developer of the UPenn algorithm, theorizes that implementing an algorithm would remove human bias, since it would leave all the analysis to data-based computations and eliminate the need to understand why people commit crimes or what causes them to do so. According to a study on algorithmic fairness, however, disregarding the social and historical context of crime, such as the correlation between poverty, minority communities, and high crime rates, oversimplifies the structural problem of crime, which in turn “reinscribes violence on communities that have already experienced structural violence”.

It seems that these predictive policing and risk assessment algorithms cannot include race as a factor, since they would inevitably be trained on racially biased data, nor can they ignore the social context of race, since all the other factors are likely to be bound up with it. This, added to the danger of perpetuating a vicious cycle of recriminalization of formerly incarcerated people through risk analysis and surveillance, shows that it would not be safe for algorithms to take on a prominent role in the criminal justice system.

A proposed solution by Faddoul is for algorithms to provide judges with “risk probabilities” instead of a label and be a tool for the judge, who will have access to social and contextual knowledge of each case, to “refine assessments instead of raising preemptive red flags”. This method would place trust in judges to be unbiased and would also depend on how judges would perceive the importance of the algorithm results.
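The difference between a label and a probability can be shown with a small sketch. The probabilities, the names in `cases`, the 0.5 threshold, and the function `risk_label` are all hypothetical; they are not taken from Faddoul's study or from any real risk assessment tool.

```python
def risk_label(probability, threshold=0.5):
    """Collapse a probability into a binary label (assumed threshold)."""
    return "high risk" if probability >= threshold else "low risk"


# Hypothetical model outputs for three people; values are invented.
cases = {"person_1": 0.51, "person_2": 0.95, "person_3": 0.49}

for name, probability in cases.items():
    label = risk_label(probability)
    print(f"{name}: probability={probability:.2f}, label={label}")
```

In this toy example, person_1 and person_2 receive the same “high risk” label despite very different scores, while person_1 and person_3 land on opposite sides of the line despite nearly identical ones. Showing a judge the probability rather than the label keeps that distinction visible.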

Nevertheless, algorithms should not become the judge and jury of the criminal justice system. As of now, they are not advanced enough to address the deep-rooted racial discrimination within the criminal justice system, built on decades of racially biased policing and laws. Using algorithms as tools, as Faddoul proposes, does not solve the problem of bias, since reliance on judges leaves room for human bias. The best solution, as this study proposes, is to research and develop an algorithm that takes historical biases into account and works to correct them. Doing so might be a step toward a better criminal justice system. If such an algorithm is developed, it must also avoid the cycle of recriminalization prevalent in the current justice system. Risk assessment algorithms like the ones described above over-police those labeled “high risk” and lead to more repeat arrests than before. This perpetuates the growing problem of mass incarceration and the overcrowding of prisons and jails.

Until an algorithm exists that corrects existing prejudices and stops the cycle of recriminalization, predictive algorithms should not be used in any aspect of the criminal justice system.

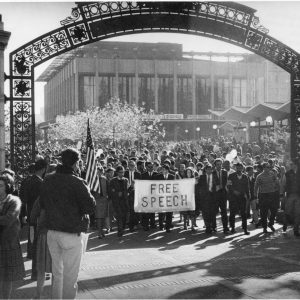

Featured Image source: ACLU.org
