I paid for lunch today with my face. Well, not really, but I didn’t need cash or a physical debit card, courtesy of Apple’s Tap-To-Pay technology. I didn’t even have to touch the screen; Apple’s incorporation of Facial Recognition Software provides users with unparalleled convenience, allowing us to unlock our phones and use countless features with a single glance. Isn’t that the point of technology: to reflect the greatest intellectual leaps humankind has ever made while furthering convenience, efficiency, and ease? Certainly, but the utility we receive from wondrous technological advancements is often overshadowed by the problems they usher in, and Facial Recognition Software is a prime example of a technology whose costs far exceed its benefits.

Before analyzing the problems with Facial Recognition Software (FRS), we need some important context. The overarching theme of this controversy is actually surveillance, not technology. Technology plays a primary role in exploitative surveillance, even when the technology itself works as intended. Historically, governments and other authorities have used surveillance to oppress certain groups of people under the guise of promoting safety and security. Beginning in 1898, the United States practiced surveillance methods in the Philippines that compiled personal details on Manila’s inhabitants, methods that would eventually contribute to the Sedition Act of 1918. Specifically, William Howard Taft (who became the islands’ civil governor in 1901) blackmailed and pressured Filipino leaders with information unjustly collected about political alignments, personal finances, and kinship networks. The Sedition Act of 1918 restricted First Amendment rights to free speech, allowing the United States government to prosecute those who spoke out against the ongoing war effort. More recently, during the peak of the Black Lives Matter protests, law enforcement unconstitutionally targeted protestors with high-tech methods such as aerial surveillance and social media monitoring. This trend has only continued as technology has evolved and supplied new tools to surveil, the most recent example being the current threat to women’s rights regarding abortion and health care. Many states are seeking access to the health records of women who travel elsewhere for care so that they may prosecute accordingly, though that is an entirely different issue. Facial Recognition Software is one of the more recent tools of surveillance, and to understand its drawbacks, we must first examine its failures.

The failures of Facial Recognition Software are appalling. Take the case of 42-year-old Robert Julian-Borchak Williams, a Black Michigan resident whose routine workday was interrupted in January 2020 by a phone call from Detroit police directing him to turn himself in to be arrested. He brushed the call off as a prank, but when he drove home, the innocent man found himself surrounded by police vehicles in his driveway, a shocking sight that escalated into a forceful arrest in front of his wife and children. After being held overnight, Williams faced an interrogation in which police presented him with several images of a heavyset Black man. After Williams repeated adamantly that the person in the images was not him, police nonchalantly replied: “I guess the computer got it wrong.” Robert Williams’s circumstances are not an anomaly; there have been multiple instances of FRS misidentification in recent years. The most notable are the cases of Detroit resident Michael Oliver and New Jerseyan Nijeer Parks, both Black men. Both were misidentified, wrongly arrested, and forced to incur thousands of dollars in costs. While these three cases may be the most extreme forms of FRS failure and harm, there are dozens of others that show how ineffective FRS can be and, more importantly, how disproportionately certain groups are affected. Take just one more example: in a 2018 test run by the American Civil Liberties Union, Amazon’s facial recognition software falsely matched 28 members of Congress to mugshots of people who had been arrested, and people of color were disproportionately represented among the false matches. If law enforcement continues to use this software, court cases may increasingly rely on the whims of a computer rather than true evidence. Surveillance will further evolve into an algorithmic game of roulette in which those who created the game are ‘coincidentally’ the same ones protected, and the ball will nearly always land on Black.

But why is Facial Recognition Software so flawed in the first place? To answer that, we have to understand how the technology works. The system uses biometrics to map facial features from a picture or video. In most cases, the most heavily analyzed features are the eyes, nose, and jawline. The technology then compares the captured data against a database of available faces until a match is made. Unfortunately, this process isn’t as reliable as it sounds. The algorithms that support Facial Recognition Software have much higher failure rates when analyzing Black women than Black men. The 2018 “Gender Shades” study concluded that “all classifiers performed best for lighter individuals and males overall.” The results were as follows: all classifiers perform better on male faces than female faces (8.1% to 20.6% difference in error rate), all classifiers perform better on lighter faces than darker faces (11.8% to 19.2% difference in error rate), and all classifiers perform worst on darker female faces (20.8% to 34.7% error rate). These results raise the question: why do these disparities emerge from Facial Recognition Software? The answer is multifaceted; firstly, the simplest truth is that these algorithms learn from facial databases that contain a higher share of white men than of any other demographic group. Naturally, the algorithms learn to analyze lighter faces more effectively because they have more practice with these faces.
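To make the matching step described above concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any real product works: the three-number “faceprints,” the names `person_a` and `person_b`, the `best_match` function, and the threshold values are all hypothetical, and real systems use far larger feature vectors produced by trained models.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(probe, database, threshold):
    """Return (name, distance) for the closest enrolled face.

    Returns None when even the closest face is farther away than
    `threshold`, i.e. when the system should report "no match".
    """
    best_name, best_dist = None, float("inf")
    for name, features in database.items():
        dist = euclidean_distance(probe, features)
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_dist <= threshold:
        return best_name, best_dist
    return None


# Hypothetical database of enrolled faces (made-up numbers).
database = {
    "person_a": [0.10, 0.80, 0.30],
    "person_b": [0.70, 0.20, 0.90],
}

# A new photo of person_a: close to the enrolled vector, so it matches.
match = best_match([0.12, 0.78, 0.33], database, threshold=0.1)

# A stranger who is NOT in the database.
stranger = [0.40, 0.50, 0.50]
no_match = best_match(stranger, database, threshold=0.1)     # strict threshold
loose_match = best_match(stranger, database, threshold=0.5)  # loose threshold

print(match)
print(no_match)
print(loose_match)
```

With the strict threshold, the stranger is correctly reported as no match. With the loose threshold, the same stranger is “identified” as `person_a`, simply because `person_a` happens to be the nearest face in the database. That is the shape of a false match: the system always has a closest candidate, and the threshold alone decides whether it is presented as an identification.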

Secondly, the vast majority of camera settings (including those used by FRS) are not designed to properly render darker skin tones. A common defense holds that default camera settings are simply that: default. This defense is usually supplemented by the claim that nothing can be changed about the way cameras detect skin tones, and that lighting conditions will always produce the issue described above. But this defense is blatantly untrue! Kodak created cameras with adjusted settings to better capture Black individuals as early as 1970. And no, this doesn’t come at the cost of reducing the ability to accurately render lighter faces. The technology necessary to help optimize facial databases not only exists but was created over half a century ago. If FRS use by law enforcement is ever to be morally just, existing databases must become more inclusive and diverse, and the algorithms used to analyze those databases must be optimized to handle all skin tones equally well.

So what’s being done about the asymmetry of this problem? Because the flaws of FRS fall disproportionately on Black individuals and cannot be overlooked, San Francisco (among a few other major cities) has taken a stand. In 2019, the city passed one of the most important pieces of legislation concerning the technology. Ordinance 190110 effectively bans the use of FRS by all city departments (including police) except airport security. Should any such city department wish to use Facial Recognition Software, “a department must obtain Board of Supervisors approval by ordinance of a Surveillance Technology Policy under which the Department will acquire and use Surveillance Technology.” The policy states that this approval must be acquired before seeking funds for facial recognition (including applying for a grant or accepting private donations), acquiring or borrowing surveillance technology, using existing surveillance technology, and even entering an oral or written agreement with an individual or group promising to regularly supply the department with data acquired through Facial Recognition Software. This aspect of the ordinance is arguably the most important, for several reasons. Not only will police in San Francisco be unable to fund this surveillance technology without a lengthy approval process, but they will also be unable to bring existing technology into a case. More importantly, police will also be barred from using a third party or private entity to collect data and information. Together, these restrictions leave San Francisco police with no outlet for using Facial Recognition Software for any crime-related purpose. In the past, police departments could have consulted third parties in their efforts to surveil and, in most cases, oppress. The guidelines outlined in this ordinance are a major victory in the fight against this oppressive technology, as they curtail the use of FRS by San Francisco police entirely.

The rapidly increasing rate of technological development functions as a wellspring; many benefits have sprung forth, but not every draught from that spring is palatable. Essentially, the insatiable human affinity for creation has outpaced any desire to repair: rather than attempting to prune the roots that entwine systemic inequality with aspects of our society, we’ve allowed the roots to grow deeper by creating more innovations that exacerbate those same inequalities. Recently, surveillance has also risen sharply as large corporations that profit from data mining have exploited the goldmine of data collection. The intersection between technology and surveillance has created a frightening reality in which technology reflects the detrimental biases that exist pervasively within society. The entire situation is somewhat controversial because Facial Recognition Software has proven helpful in the past. Specifically, FRS has been effective in airport security and matters of national security. However, if a technology is not perfect (or near-perfect), it should not be used by any authoritative power in any manner. In other words, if a technology does not exhibit similar accuracy across all races and genders, along with an accuracy above some threshold (let’s say 90%), it should not be placed in the hands of law enforcement. Technology will only continue to develop and emerge, and with it, surveillance techniques. As these new technologies emerge from the wellspring, the pool only enlarges, and separating the ‘good’ from the ‘bad’ will quickly become more and more like pulling two water droplets apart.
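The standard proposed above (similar accuracy across all groups, plus a minimum accuracy of about 90%) can be written down as a simple check. The sketch below is one possible reading of that standard, not an established auditing method: the function name `passes_audit`, the 5-point parity gap, the group labels, and every count in the sample data are hypothetical and chosen only for illustration.

```python
def passes_audit(results, min_accuracy=0.90, max_gap=0.05):
    """Check a system against an accuracy floor and a parity requirement.

    `results` maps a group label to (correct_identifications, total_tests).
    The system passes only if its WORST group meets `min_accuracy` and the
    gap between its best and worst groups is at most `max_gap`.
    """
    accuracies = {
        group: correct / total for group, (correct, total) in results.items()
    }
    worst = min(accuracies.values())
    best = max(accuracies.values())
    meets_floor = worst >= min_accuracy
    meets_parity = (best - worst) <= max_gap
    return meets_floor and meets_parity, accuracies


# Made-up test results for illustration only; not real measurements.
hypothetical_results = {
    "lighter_male": (990, 1000),
    "lighter_female": (930, 1000),
    "darker_male": (880, 1000),
    "darker_female": (700, 1000),
}

approved, accuracies = passes_audit(hypothetical_results)
print(approved)
print(accuracies)

# A system that performs equally well for every group passes.
even_results = {group: (950, 1000) for group in hypothetical_results}
approved_even, _ = passes_audit(even_results)
print(approved_even)
```

The key design choice is that the floor applies to the worst-performing group rather than to an average. An average can look respectable while hiding exactly the kind of disparity this essay is about.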

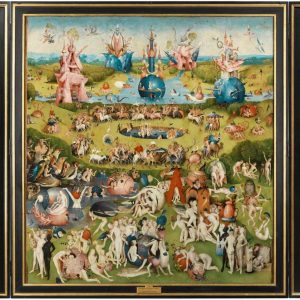

Featured Image Source: Medical News Today
